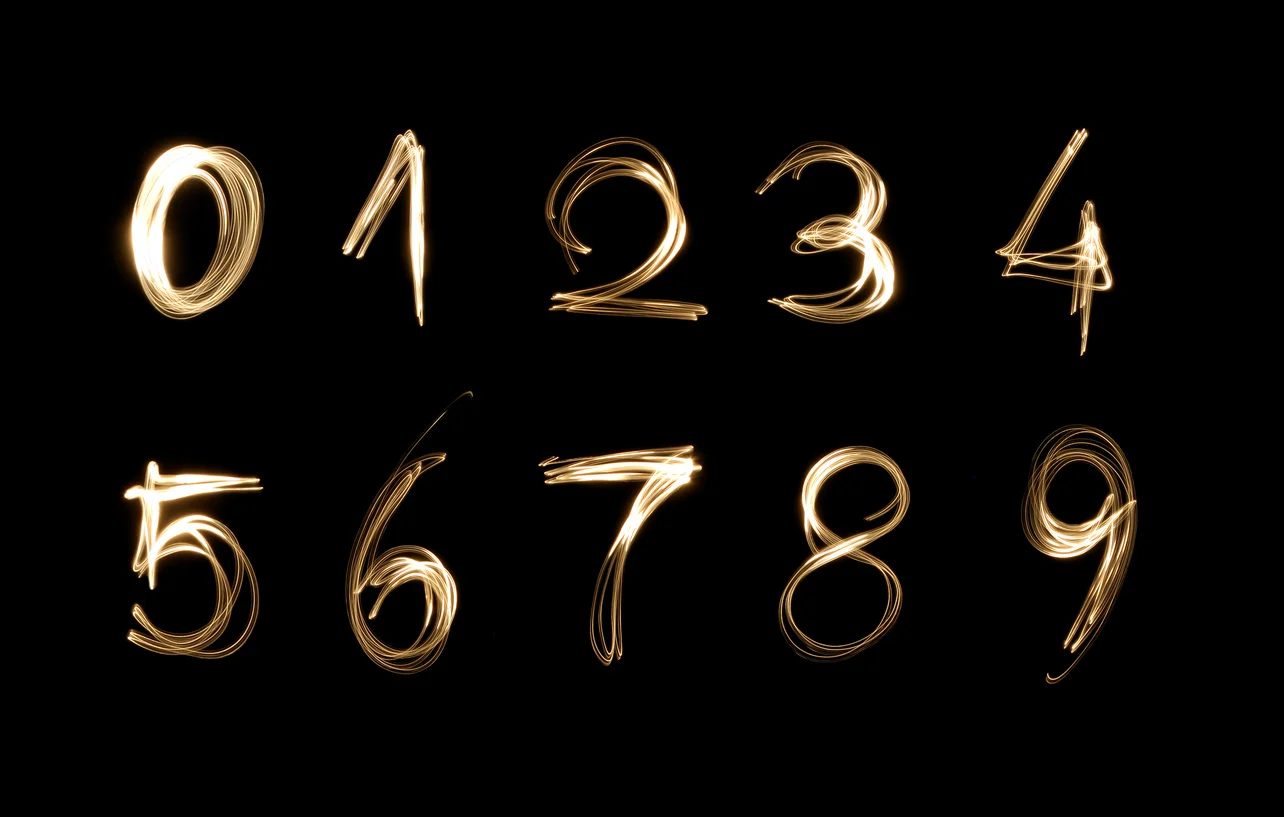

Can you read my handwriting? Adventures in Image Recognition

Hosted by Yann LeCun, the MNIST database is a collection of handwritten digits, with a training set of 60,000 examples and a test set of 10,000 examples. The digits have been size-normalized and centered. It's also hosted on Kaggle as part of their 'Getting Started' set of problems, and I had a go at tackling it on Day 3 of my own journey through Siraj Raval's #100DaysofMLCode challenge.

It's a classic entry-level dataset for those seeking to cut their teeth on image and pattern recognition techniques. Here, we'll try a couple of approaches on the data and compare their performance.

So what do we have in the dataset? It consists of images of handwritten digits, each 28 pixels by 28 pixels. Here, the data has been pre-prepared: each image is flattened into a single row of 784 values, one per pixel, holding that pixel's grayscale intensity from 0 to 255. From this our model can learn to classify our images. So we are looking at a classification problem, which should give us some hints in terms of model selection.

We can even reconstruct an image by taking one example, loading it into a NumPy array and reshaping it so that it is two-dimensional (28 x 28 pixels). Then we can plot it with matplotlib.
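As a minimal sketch of that reconstruction, the snippet below reshapes a flat row of 784 pixel values into a 28 x 28 array. The helper names `to_image` and `show_digit` are made up for illustration, and the row is a synthetic stand-in (a vertical bar) rather than a real example from the dataset.

```python
import numpy as np


def to_image(row, side=28):
    """Reshape a flat vector of side*side pixel values into a 2-D image."""
    pixels = np.asarray(row)
    if pixels.size != side * side:
        raise ValueError(f"expected {side * side} values, got {pixels.size}")
    return pixels.reshape(side, side)


def show_digit(row, side=28):
    """Plot one flattened example as a grayscale image."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for plotting

    plt.imshow(to_image(row, side), cmap="gray")
    plt.axis("off")
    plt.show()


# Synthetic stand-in for one row of the data: a vertical bar, a crude "1".
row = np.zeros(784, dtype=np.uint8)
row[14::28] = 255  # every 28th value starting at 14 lands in column 14

image = to_image(row)
```

Calling `show_digit(row)` would then draw the bar. With the real data you would pass in one row of pixel columns instead of the synthetic `row`.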

Our strategy for dealing with the classification problem was to create a support vector classifier via sklearn. A support vector classifier works by identifying a hyperplane that can divide two classes. Support vectors are the data points closest to the hyperplane: if they were moved, the hyperplane itself would move.

The further other data points lie from the hyperplane, the more confident we can be about the class to which they belong. So ideally we want our data points to be as far away from the hyperplane as possible.

But what if our classes are intermingled or not linearly separable? The gap between the hyperplane and the nearest training points is called the 'margin', and support vector classifiers permit some error by making that margin 'soft', so that a few training points may fall inside it or on the wrong side of the hyperplane. The classifier selects the hyperplane with the maximum margin, giving the greatest possible chance that the correct class will be selected on new data.
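To make support vectors and distance from the hyperplane a little more concrete, here is a small sketch on made-up two-dimensional toy data (not the digits). It fits a linear `SVC`, reads back the support vectors, and uses `decision_function` as a signed score that grows as a point moves away from the hyperplane.

```python
import numpy as np
from sklearn.svm import SVC

# Two small, clearly separated 2-D clusters (toy data for illustration only).
X = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.0],
              [5.0, 5.0], [5.5, 6.0], [6.0, 5.0]])
y = np.array([0, 0, 0, 1, 1, 1])

clf = SVC(kernel="linear", C=1.0).fit(X, y)

# The training points that pin down the hyperplane.
support_vectors = clf.support_vectors_

# decision_function gives a signed score: the sign picks the class and the
# magnitude grows with distance from the hyperplane.
near = np.array([[3.6, 3.6]])  # close to the boundary
far = np.array([[9.0, 9.0]])   # deep inside class 1 territory
near_score = clf.decision_function(near)[0]
far_score = clf.decision_function(far)[0]
```

Only a few of the six training points end up in `support_vectors`; the rest could be moved around (without crossing the margin) and the hyperplane would stay where it is.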

We can manipulate a parameter 'C', which is a penalty term that applies a cost for each misclassified training example. For large values of C, the model will choose a smaller-margin hyperplane if that does a better job of getting all the training points classified correctly. A small value of C will cause the optimizer to look for a larger margin, and thus it will tolerate more data points falling on the 'wrong' side of the hyperplane, even if that means misclassifying some training examples.
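The effect of C on the margin can be checked directly with a linear kernel, where the margin width is 2 / ||w||. The sketch below uses overlapping synthetic blobs rather than the digits; the helper `margin_width` and the two C values are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Two overlapping 2-D clusters, so some points must end up on the wrong side.
X, y = make_blobs(n_samples=200, centers=[[0, 0], [3, 3]],
                  cluster_std=1.5, random_state=0)


def margin_width(C):
    """Fit a linear SVC and return the width of its margin, 2 / ||w||."""
    clf = SVC(kernel="linear", C=C).fit(X, y)
    return 2.0 / np.linalg.norm(clf.coef_)


wide = margin_width(0.01)     # small C: cheap mistakes, wider margin
narrow = margin_width(100.0)  # large C: costly mistakes, narrower margin
```

In practice C is usually tuned by cross-validation rather than set by hand, since the best trade-off depends on the data.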

SVMs commonly perform well on image recognition challenges, and are often said to do particularly well on colour-based recognition.

The original images are grayscale, as the image reproduction above showed. This makes it unlikely that our data will be linearly separable. We have two possible tactics to address this. We can simplify our data by changing our images to black and white, replacing any pixel value > 0 with 1 and leaving all our existing 0s intact. Strictly speaking this reduces the range of values each pixel can take rather than the number of dimensions, which stays at 784.
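The black and white transform is a one-liner in NumPy. This sketch uses a tiny made-up array in place of the real pixel data, and `binarize` is an illustrative helper name.

```python
import numpy as np


def binarize(pixels):
    """Return a copy with every value > 0 set to 1; zeros stay zero."""
    pixels = np.asarray(pixels)
    return (pixels > 0).astype(np.uint8)


# A tiny made-up grayscale patch standing in for real pixel rows.
gray = np.array([[0, 12, 255],
                 [0, 0, 128]], dtype=np.uint8)

bw = binarize(gray)
# bw is [[0, 1, 1], [0, 0, 1]]
```

The same transform has to be applied to both the training and the test data, otherwise the classifier is scored on inputs it was never trained to read.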

Alternatively, we can change the kernel parameter of SVC. A kernel is a means by which we can separate data that is not linearly separable in its original space. If we map the data into a higher-dimensional space via the kernel, we can use a plane to separate data that no straight line could separate in two dimensions.
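A quick way to see what a kernel buys you is a dataset that no straight line can split, such as one circle nested inside another. The sketch below uses sklearn's synthetic `make_circles` data, not the digits, and a degree-2 polynomial kernel chosen for illustration.

```python
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# A small circle of one class nested inside a larger circle of the other.
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)

# A linear kernel has to draw a straight line through both circles.
linear_score = SVC(kernel="linear").fit(X, y).score(X, y)

# A degree-2 polynomial kernel implicitly works with squared terms, where the
# two circles can be divided by a flat surface.
poly_score = SVC(kernel="poly", degree=2).fit(X, y).score(X, y)
```

Here `poly_score` should come out well ahead of `linear_score`, because the squared features turn "distance from the centre" into something a hyperplane can cut on.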

We've chosen to go down the polynomial kernel route, but we've included the black and white approach as an alternative for comparison.

The score of the polynomial kernel on the training set was approximately 0.976.

The alternative approach was to alter the training and test sets by making the images black and white instead of grayscale, using the simple transform described previously: change any pixel value > 0 to 1 and leave all 0s unchanged.

But the 'black and white' SVC classifier, when fit and scored, performs worse on the training set than the polynomial kernel.
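A comparison along these lines can be sketched as follows. To stay self-contained it uses sklearn's small built-in digits set (8 x 8 images with pixel values 0 to 16) as a stand-in for the Kaggle data, so the scores will not match the ones quoted here. The held-out split, the default polynomial degree and the default kernel for the black and white classifier are all assumptions, not the settings from my notebook.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Stand-in data: 8x8 grayscale digits bundled with sklearn.
digits = load_digits()
X_train, X_val, y_train, y_val = train_test_split(
    digits.data, digits.target, test_size=0.2, random_state=0
)

# Route 1: polynomial kernel on the grayscale pixels.
poly_clf = SVC(kernel="poly").fit(X_train, y_train)
poly_score = poly_clf.score(X_val, y_val)

# Route 2: binarize the pixels first, then fit an SVC with default settings.
bw_train = (X_train > 0).astype(float)
bw_val = (X_val > 0).astype(float)
bw_clf = SVC().fit(bw_train, y_train)
bw_score = bw_clf.score(bw_val, y_val)

print(f"polynomial kernel, grayscale: {poly_score:.3f}")
print(f"default kernel, black/white:  {bw_score:.3f}")
```

Scoring on a held-out split rather than on the data used for fitting gives a fairer idea of how each route will do on unseen digits.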

The final submission uses the polynomial kernel and attains 0.975 on the test set. If you want to view my notebook, it's available on my Kaggle profile.